notebooks/pipcook_image_classification.ipynb (256 lines of code) (raw):

{

"nbformat": 4,

"nbformat_minor": 2,

"metadata": {

"colab": {

"name": "pipcook_image_classification.ipynb",

"provenance": [],

"collapsed_sections": [],

"toc_visible": true

},

"kernelspec": {

"name": "python3",

"display_name": "Python 3"

},

"accelerator": "GPU"

},

"cells": [

{

"cell_type": "markdown",

"source": [

"## Environment Initialization\n",

"This cell is used to initlialize necessary environments for pipcook to run, including Node.js 12.x."

],

"metadata": {

"id": "lJtMrk5VGYMn"

}

},

{

"cell_type": "code",

"execution_count": null,

"source": [

"!wget -P /tmp https://nodejs.org/dist/v12.19.0/node-v12.19.0-linux-x64.tar.xz\n",

"!rm -rf /usr/local/lib/nodejs\n",

"!mkdir -p /usr/local/lib/nodejs\n",

"!tar -xJf /tmp/node-v12.19.0-linux-x64.tar.xz -C /usr/local/lib/nodejs\n",

"!sh -c 'echo \"export PATH=/usr/local/lib/nodejs/node-v12.19.0-linux-x64/bin:\\$PATH\" >> /etc/profile'\n",

"!rm -f /usr/bin/node\n",

"!rm -f /usr/bin/npm\n",

"!ln -s /usr/local/lib/nodejs/node-v12.19.0-linux-x64/bin/node /usr/bin/node\n",

"!ln -s /usr/local/lib/nodejs/node-v12.19.0-linux-x64/bin/npm /usr/bin/npm\n",

"!npm config delete registry\n",

"\n",

"import os\n",

"PATH_ENV = os.environ['PATH']\n",

"%env PATH=/usr/local/lib/nodejs/node-v12.19.0-linux-x64/bin:${PATH_ENV}"

],

"outputs": [],

"metadata": {

"id": "xIxSfN0KaMO_"

}

},

{

"cell_type": "markdown",

"source": [

"## install pipcook cli tool\n",

"pipcook-cli is the cli tool for pipcook for any operations later, including installing pipcook, run pipcook jobs and checking logs."

],

"metadata": {

"id": "3ZDRzj2BGq8x"

}

},

{

"cell_type": "code",

"execution_count": null,

"source": [

"!npm install @pipcook/cli -g\n",

"!rm -f /usr/bin/pipcook\n",

"!ln -s /usr/local/lib/nodejs/node-v12.19.0-linux-x64/bin/pipcook /usr/bin/pipcook"

],

"outputs": [],

"metadata": {

"id": "kMeOA1CibuyF"

}

},

{

"cell_type": "markdown",

"source": [

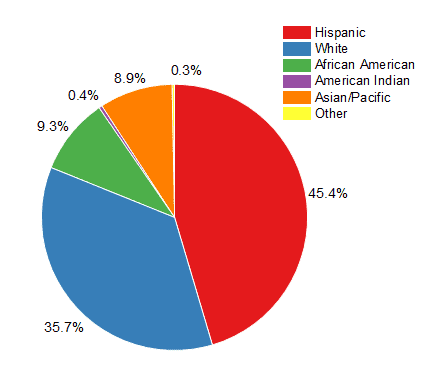

"# Classify images of UI components\n",

"\n",

"## Background\n",

"\n",

"Have you encountered such a scenario in the front-end business: there are some images in your hand, and you want an automatic way to identify what front-end components these images are, whether it is a button, a navigation bar, or a form? This is a typical image classification task.\n",

"\n",

"> The task of predicting image categories is called image classification. The purpose of training the image classification model is to identify various types of images.\n",

"\n",

"This identification is very useful. You can use this identification information for code generation or automated testing.\n",

"\n",

"Taking code generation as an example, suppose we have a sketch design draft and the entire design draft is composed of different components. We can traverse the layers of the entire design draft. For each layer, use the model of image classification to identify what component each layer is. After that, we can replace the original design draft layer with the front-end component to generate the front-end code.\n",

"\n",

"Another example is in the scenario of automated testing. We need an ability to identify the type of each layer. For the button that is recognized, we can automatically click to see if the button works. For the list component that we recognize, we can automatically track loading speed to monitor performance, etc.\n",

"\n",

"## Examples\n",

"\n",

"For example, in the scenario where the forms are automatically generated, we need to identify which components are column charts or pie charts, as shown in the following figure:\n",

"\n",

"\n",

"\n",

" \n",

"\n",

"After the training is completed, for each picture, the model will eventually give us the prediction results we want. For example, when we enter the line chart of Figure 1, the model will give prediction results similar to the following:\n",

"\n",

"```\n",

"[[0.1, 0.9]]\n",

"```\n",

"\n",

"At the same time, we will generate a labelmap during training. Labelmap is a mapping relationship between the serial number and the actual type. This generation is mainly due to the fact that our classification name is text, but before entering the model, we need to convert the text Into numbers. Here is a labelmap:\n",

"\n",

"```json\n",

"{\n",

" \"column\": 0,\n",

" \"pie\": 1,\n",

"}\n",

"```\n",

"\n",

"First, why is the prediction result a two-dimensional array? First of all, the model allows prediction of multiple pictures at once. For each picture, the model will also give an array, this array describes the possibility of each classification, as shown in the labelmap, the classification is arranged in the order of column chart and pie chart, then corresponding to the prediction result of the model, We can see that the column chart has the highest confidence, which is 0.9, so this picture is predicted to be a column chart, that is, the prediction is correct.\n",

"\n",

"## Data Preparation\n",

"\n",

"When we are doing image classification tasks similar to this one, we need to organize our dataset in a certain format.\n",

"\n",

"We need to divide our dataset into a training set (train), a validation set (validation) and a test set (test) according to a certain proportion. Among them, the training set is mainly used to train the model, and the validation set and the test set are used to evaluate the model. The validation set is mainly used to evaluate the model during the training process to facilitate viewing of the model's overfitting and convergence. The test set is used to perform an overall evaluation of the model after all training is completed.\n",

"\n",

"In the training/validation/test set, we will organize the data according to the classification category. For example, we now have two categories, line and ring, then we can create two folders for these two category names, in the corresponding Place pictures under the folder. The overall directory structure is:\n",

"\n",

"- train\n",

" - ring\n",

" - xx.jpg\n",

" - ...\n",

" - line\n",

" - xxjpg\n",

" - ...\n",

" - column\n",

" - ...\n",

" - pie\n",

" - ...\n",

"- validation\n",

" - ring\n",

" - xx.jpg\n",

" - ...\n",

" - line\n",

" - xx.jpg\n",

" - ...\n",

" - column\n",

" - ...\n",

" - pie\n",

" - ...\n",

"- test\n",

" - ring\n",

" - xx.jpg\n",

" - ...\n",

" - line\n",

" - xx.jpg\n",

" - ...\n",

" - column\n",

" - ...\n",

" - pie\n",

" - ...\n",

"\n",

"We have prepared such a dataset, you can download it and check it out:[Download here](http://ai-sample.oss-cn-hangzhou.aliyuncs.com/pipcook/datasets/component-recognition-image-classification/component-recognition-classification.zip).\n",

"\n",

"## Start Training\n",

"\n",

"After the dataset is ready, we can start training. Using Pipcook can be very convenient for the training of image classification. You only need to build the following pipeline:\n",

"```json\n",

"{\n",

" \"specVersion\": \"2.0\",\n",

" \"datasource\": \"https://cdn.jsdelivr.net/gh/imgcook/pipcook-script@9d210de/scripts/image-classification-mobilenet/build/datasource.js?url=http://ai-sample.oss-cn-hangzhou.aliyuncs.com/pipcook/datasets/component-recognition-image-classification/component-recognition-classification.zip\",\n",

" \"dataflow\": [\n",

" \"https://cdn.jsdelivr.net/gh/imgcook/pipcook-script@9d210de/scripts/image-classification-mobilenet/build/dataflow.js?size=224&size=224\"\n",

" ],\n",

" \"model\": \"https://cdn.jsdelivr.net/gh/imgcook/pipcook-script@9d210de/scripts/image-classification-mobilenet/build/model.js\",\n",

" \"artifact\": [{\n",

" \"processor\": \"pipcook-artifact-zip@0.0.2\",\n",

" \"target\": \"/tmp/mobilenet-model.zip\"\n",

" }],\n",

" \"options\": {\n",

" \"framework\": \"tfjs@3.8\",\n",

" \"train\": {\n",

" \"epochs\": 15,\n",

" \"validationRequired\": true\n",

" }\n",

" }\n",

"}\n",

"\n",

"```\n",

"Through the above scripts, we can see that they are used separately:\n",

"\n",

"1. **datasource** This script is used to download the dataset that meets the image classification described above. Mainly, we need to provide the url parameter, and we provide the dataset address that we prepared above\n",

"2. **dataflow** When performing image classification, we need to have some necessary operations on the original data. For example, image classification requires that all pictures are of the same size, so we use this script to resize the pictures to a uniform size\n",

"3. **model** We use this script to define, train and evaluate and save the model.\n",

"\n",

"[mobilenet](https://arxiv.org/abs/1704.04861) is a lightweight model which can be trained on CPU. If you are using [resnet](https://arxiv.org/abs/1512.03385),since the model is quite large, we recommend use to train on GPU. \n",

"\n",

 CUDA, short for">
"> CUDA, short for Compute Unified Device Architecture, is a parallel computing platform and programming model created by NVIDIA for its GPUs (Graphics Processing Units, commonly known as graphics cards).\n",

"\n",

"> With CUDA, GPUs can be conveniently used for general-purpose calculations (much like numerical calculations performed on the CPU). Before CUDA, GPUs were generally only used for graphics rendering (for example through OpenGL or DirectX).\n",

"\n",

"Now let's run our image-classification job!"

],

"metadata": {

"id": "64NgxpWhHcXK"

}

},

{

"cell_type": "code",

"execution_count": null,

"source": [

"!sudo pipcook train https://raw.githubusercontent.com/alibaba/pipcook/main/example/pipelines/image-classification-resnet.json -o my-pipcook"

],

"outputs": [],

"metadata": {

"id": "KMNkCyFQeEml"

}

},

{

"cell_type": "markdown",

"source": [

"Often the model will converge at 10-20 epochs. Of course, it depends on the complexity of your dataset. Model convergence means that the loss (loss value) is low enough and the accuracy is high enough.\n",

"\n",

"After the training is completed, output will be generated in the `my-pipcook` directory, which is a pipcook workspace.\n",

"\n",

"Now we can predict. You can just have a try on code below to predict the image we provide. You can replace the image url with your own url to try on your own dataset. The predict result is in form of probablity of each category \n",

"as we have explained before."

],

"metadata": {

"id": "UCz1sbHRH1IZ"

}

},

{

"cell_type": "code",

"execution_count": null,

"source": [

"!wget https://img.alicdn.com/tfs/TB1ekuMhQY2gK0jSZFgXXc5OFXa-400-400.jpg -O sample.jpg\n",

"!sudo pipcook predict ./my-pipcook -s ./sample.jpg"

],

"outputs": [],

"metadata": {

"id": "OGWgMXDBf_7g"

}

},

{

"cell_type": "markdown",

"source": [

"\n",

"Note that the prediction result we give is the probability of each category. You can process this probability to the result you want.\n",

"\n",

"## Conclusion\n",

"\n",

"In this way, the component recognition task based on the image classification model is completed. After completing the pipeline in our example, if you are interested in such tasks, you can also start preparing your own dataset for training. We have already introduced the format of the dataset in detail in the data preparation chapter. You only need to follow the file directory to easily prepare the data that matches our image classification pipeline."

],

"metadata": {

"id": "DkyErWesELTg"

}

}

]

}