notebooks/zh-CN/automatic_embedding_tei_inference_endpoints.ipynb (826 lines of code) (raw):

{

"cells": [

{

"cell_type": "markdown",

"id": "5d9aca72-957a-4ee2-862f-e011b9cd3a62",

"metadata": {},

"source": [

"# 怎么使用推理端点去嵌入文档\n",

"\n",

"_作者: [Derek Thomas](https://huggingface.co/derek-thomas)_\n",

"\n",

"## 目标\n",

"\n",

"我有一个数据集,我想为其嵌入语义搜索(或问答,或 RAG),我希望以最简单的方式嵌入这个数据集并将其放入一个新的数据集中。\n",

"\n",

"## 方法\n",

"\n",

"我将使用我最喜欢的 subreddit [r/bestofredditorupdates](https://www.reddit.com/r/bestofredditorupdates/) 中的数据集。因为它有很长的条目,同时使用新的 [jinaai/jina-embeddings-v2-base-en](https://huggingface.co/jinaai/jina-embeddings-v2-base-en) 嵌入模型,因为它有 8k 的上下文长度。还将使用 [推理端点](https://huggingface.co/inference-endpoints) 部署这个,以节省时间和金钱。要跟随这个教程,你需要**已经添加了支付方式**。如果你还没有添加,可以在 [账单](https://huggingface.co/docs/hub/billing#billing) 中添加。为了使操作更加简单,我将完全基于 API 进行操作。\n",

"\n",

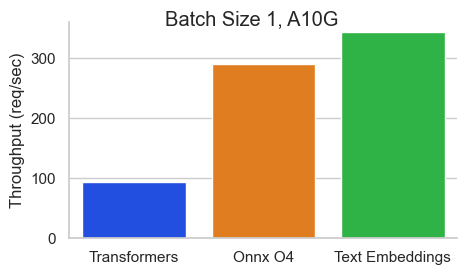

"为了使这个过程更快,我将使用 [Text Embeddings Inference](https://github.com/huggingface/text-embeddings-inference) 镜像。这有许多好处,比如:\n",

"- 无需模型图编译步骤\n",

"- Docker 镜像小,启动时间快。真正的无服务器!\n",

"- 基于 token 的动态批处理\n",

"- 使用 Flash 注意力机制、Candle 和 cuBLASLt 优化的 transformers 代码进行推理\n",

"- Safetensors 权重加载\n",

"- 生产就绪(使用 Open Telemetry 进行分布式跟踪,Prometheus 指标)\n",

"\n",

"\n",

""

]

},

{

"cell_type": "markdown",

"id": "3c830114-dd88-45a9-81b9-78b0e3da7384",

"metadata": {},

"source": [

"## 环境(Requirements)"

]

},

{

"cell_type": "code",

"execution_count": null,

"id": "35386f72-32cb-49fa-a108-3aa504e20429",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"!pip install -q aiohttp==3.8.3 datasets==2.14.6 pandas==1.5.3 requests==2.31.0 tqdm==4.66.1 huggingface-hub>=0.20"

]

},

{

"cell_type": "markdown",

"id": "b6f72042-173d-4a72-ade1-9304b43b528d",

"metadata": {},

"source": [

"## 导入包"

]

},

{

"cell_type": "code",

"execution_count": 3,

"id": "e2beecdd-d033-4736-bd45-6754ec53b4ac",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"import asyncio\n",

"from getpass import getpass\n",

"import json\n",

"from pathlib import Path\n",

"import time\n",

"from typing import Optional\n",

"\n",

"from aiohttp import ClientSession, ClientTimeout\n",

"from datasets import load_dataset, Dataset, DatasetDict\n",

"from huggingface_hub import notebook_login, create_inference_endpoint, list_inference_endpoints, whoami\n",

"import numpy as np\n",

"import pandas as pd\n",

"import requests\n",

"from tqdm.auto import tqdm"

]

},

{

"cell_type": "markdown",

"id": "5eece903-64ce-435d-a2fd-096c0ff650bf",

"metadata": {},

"source": [

"## 设置(Config)\n",

"`DATASET_IN` 你文本数据的位置\n",

"`DATASET_OUT` 你的嵌入储存的位置\n",

"\n",

"注意:我将 `MAX_WORKERS` 设置为 5,因为 `jina-embeddings-v2` 对内存的需求较大。"

]

},

{

"cell_type": "code",

"execution_count": 4,

"id": "df2f79f0-9f28-46e6-9fc7-27e9537ff5be",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"DATASET_IN = 'derek-thomas/dataset-creator-reddit-bestofredditorupdates'\n",

"DATASET_OUT = \"processed-subset-bestofredditorupdates\"\n",

"ENDPOINT_NAME = \"boru-jina-embeddings-demo-ie\"\n",

"\n",

"MAX_WORKERS = 5 # This is for how many async workers you want. Choose based on the model and hardware \n",

"ROW_COUNT = 100 # Choose None to use all rows, Im using 100 just for a demo"

]

},

{

"cell_type": "markdown",

"id": "1e680f3d-4900-46cc-8b49-bb6ba3e27e2b",

"metadata": {},

"source": [

"Hugging Face 在推理端点中提供了多种 GPU 供选择。下面以表格形式呈现:\n",

"\n",

"| GPU | 实例类型 | 实例大小 | vRAM |\n",

"|---------------------|----------------|--------------|-------|\n",

"| 1x Nvidia Tesla T4 | g4dn.xlarge | small | 16GB |\n",

"| 4x Nvidia Tesla T4 | g4dn.12xlarge | large | 64GB |\n",

"| 1x Nvidia A10G | g5.2xlarge | medium | 24GB |\n",

"| 4x Nvidia A10G | g5.12xlarge | xxlarge | 96GB |\n",

"| 1x Nvidia A100* | p4de | xlarge | 80GB |\n",

"| 2x Nvidia A100* | p4de | 2xlarge | 160GB |\n",

"\n",

"\\*注意,对于 A100 的机型你需要发邮件给我们来获取权限。"

]

},

{

"cell_type": "code",

"execution_count": 4,

"id": "3c2106c1-2e5a-443a-9ea8-a3cd0e9c5a94",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"# GPU Choice\n",

"VENDOR=\"aws\"\n",

"REGION=\"us-east-1\"\n",

"INSTANCE_SIZE=\"medium\"\n",

"INSTANCE_TYPE=\"g5.2xlarge\""

]

},

{

"cell_type": "code",

"execution_count": 5,

"id": "0ca1140c-3fcc-4b99-9210-6da1505a27b7",

"metadata": {

"tags": []

},

"outputs": [

{

"data": {

"application/vnd.jupyter.widget-view+json": {

"model_id": "ee80821056e147fa9cabf30f64dc85a8",

"version_major": 2,

"version_minor": 0

},

"text/plain": [

"VBox(children=(HTML(value='<center> <img\\nsrc=https://huggingface.co/front/assets/huggingface_logo-noborder.sv…"

]

},

"metadata": {},

"output_type": "display_data"

}

],

"source": [

"notebook_login()"

]

},

{

"cell_type": "markdown",

"id": "5f4ba0a8-0a6c-4705-a73b-7be09b889610",

"metadata": {},

"source": [

"有些用户可能会在组织中注册支付信息。这肯能会使你的支付方式链接组织。\n",

"\n",

"如果你想使用你自己的用户名,请将其留空。"

]

},

{

"cell_type": "code",

"execution_count": 6,

"id": "88cdbd73-5923-4ae9-9940-b6be935f70fa",

"metadata": {

"tags": []

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"What is your Hugging Face 🤗 username or organization? (with an added payment method) ········\n"

]

}

],

"source": [

"who = whoami()\n",

"organization = getpass(prompt=\"What is your Hugging Face 🤗 username or organization? (with an added payment method)\")\n",

"\n",

"namespace = organization or who['name']"

]

},

{

"cell_type": "markdown",

"id": "b972a719-2aed-4d2e-a24f-fae7776d5fa4",

"metadata": {},

"source": [

"## 获取数据"

]

},

{

"cell_type": "code",

"execution_count": 7,

"id": "27835fa4-3a4f-44b1-a02a-5e31584a1bba",

"metadata": {

"tags": []

},

"outputs": [

{

"data": {

"application/vnd.jupyter.widget-view+json": {

"model_id": "4041cedd3b3f4f8db3e29ec102f46a3a",

"version_major": 2,

"version_minor": 0

},

"text/plain": [

"Downloading readme: 0%| | 0.00/1.73k [00:00<?, ?B/s]"

]

},

"metadata": {},

"output_type": "display_data"

},

{

"data": {

"text/plain": [

"Dataset({\n",

" features: ['id', 'content', 'score', 'date_utc', 'title', 'flair', 'poster', 'permalink', 'new', 'updated'],\n",

" num_rows: 10042\n",

"})"

]

},

"execution_count": 7,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"dataset = load_dataset(DATASET_IN)\n",

"dataset['train']"

]

},

{

"cell_type": "code",

"execution_count": 8,

"id": "8846087e-4d0d-4c0e-8aeb-ea95d9e97126",

"metadata": {

"tags": []

},

"outputs": [

{

"data": {

"text/plain": [

"(100,\n",

" {'id': '10004zw',\n",

" 'content': '[removed]',\n",

" 'score': 1,\n",

" 'date_utc': Timestamp('2022-12-31 18:16:22'),\n",

" 'title': 'To All BORU contributors, Thank you :)',\n",

" 'flair': 'CONCLUDED',\n",

" 'poster': 'IsItAcOnSeQuEnCe',\n",

" 'permalink': '/r/BestofRedditorUpdates/comments/10004zw/to_all_boru_contributors_thank_you/',\n",

" 'new': False,\n",

" 'updated': False})"

]

},

"execution_count": 8,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"documents = dataset['train'].to_pandas().to_dict('records')[:ROW_COUNT]\n",

"len(documents), documents[0]"

]

},

{

"cell_type": "markdown",

"id": "93096cbc-81c6-4137-a283-6afb0f48fbb9",

"metadata": {},

"source": [

"# 推理端点\n",

"## 创建推理端点\n",

"\n",

"我们将使用 [API](https://huggingface.co/docs/inference-endpoints/api_reference) 来创建一个 [推理端点](https://huggingface.co/inference-endpoints)。主要有以下几个好处:\n",

"- 方便(无需点击)\n",

"- 可重复(我们有代码可以轻松运行它)\n",

"- 更便宜(无需花费时间等待加载,并且可以自动关闭)\n",

"\n"

]

},

{

"cell_type": "code",

"execution_count": 9,

"id": "9e59de46-26b7-4bb9-bbad-8bba9931bde7",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"try:\n",

" endpoint = create_inference_endpoint(\n",

" ENDPOINT_NAME,\n",

" repository=\"jinaai/jina-embeddings-v2-base-en\",\n",

" revision=\"7302ac470bed880590f9344bfeee32ff8722d0e5\",\n",

" task=\"sentence-embeddings\",\n",

" framework=\"pytorch\",\n",

" accelerator=\"gpu\",\n",

" instance_size=INSTANCE_SIZE,\n",

" instance_type=INSTANCE_TYPE,\n",

" region=REGION,\n",

" vendor=VENDOR,\n",

" namespace=namespace,\n",

" custom_image={\n",

" \"health_route\": \"/health\",\n",

" \"env\": {\n",

" \"MAX_BATCH_TOKENS\": str(MAX_WORKERS * 2048),\n",

" \"MAX_CONCURRENT_REQUESTS\": \"512\",\n",

" \"MODEL_ID\": \"/repository\"\n",

" },\n",

" \"url\": \"ghcr.io/huggingface/text-embeddings-inference:0.5.0\",\n",

" },\n",

" type=\"protected\",\n",

" )\n",

"except:\n",

" endpoint = [ie for ie in list_inference_endpoints(namespace=namespace) if ie.name == ENDPOINT_NAME][0]\n",

" print('Loaded endpoint')"

]

},

{

"cell_type": "markdown",

"id": "0f2c97dc-34e8-49e9-b60e-f5b7366294c0",

"metadata": {},

"source": [

"这里有几个设计选择:\n",

"- 像之前所说,我们使用 `jinaai/jina-embeddings-v2-base-en` 作为我们的模型。\n",

" - 为了可复现性,我们将它固定到一个特定的修订版本。\n",

"- 如果你对更多模型感兴趣,可以查看支持[列表](https://huggingface.co/docs/text-embeddings-inference/supported_models)。\n",

" - 请注意,大多数嵌入模型都是基于 BERT 架构的。\n",

"- `MAX_BATCH_TOKENS` 是根据我们的工作数量和嵌入模型的上下文窗口来选择的。\n",

"- `type=\"protected\"` 利用的是推理端点详细说明的安全功能。\n",

"- 我使用 **1x Nvidia A10**,因为 `jina-embeddings-v2` 对内存的需求很大(记住 8k 的上下文长度)。\n",

"- 如果你有高工作负载的需求,你应该考虑进一步调整 `MAX_BATCH_TOKENS` 和 `MAX_CONCURRENT_REQUESTS`。\n"

]

},

{

"cell_type": "markdown",

"id": "96d173b2-8980-4554-9039-c62843d3fc7d",

"metadata": {},

"source": [

"## 等待直到它运行起来"

]

},

{

"cell_type": "code",

"execution_count": 10,

"id": "5f3a8bd2-753c-49a8-9452-899578beddc5",

"metadata": {

"tags": []

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"CPU times: user 48.1 ms, sys: 15.7 ms, total: 63.8 ms\n",

"Wall time: 52.6 s\n"

]

},

{

"data": {

"text/plain": [

"InferenceEndpoint(name='boru-jina-embeddings-demo-ie', namespace='HF-test-lab', repository='jinaai/jina-embeddings-v2-base-en', status='running', url='https://k7l1xeok1jwnpbx5.us-east-1.aws.endpoints.huggingface.cloud')"

]

},

"execution_count": 10,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"%%time\n",

"endpoint.wait()"

]

},

{

"cell_type": "markdown",

"id": "a906645e-60de-4eb6-b8b6-3ec98a9d9b00",

"metadata": {},

"source": [

"当我们使用 `endpoint.client.post` 时,我们得到一个字节字符串。这有点繁琐,因为我们需要将这个字节字符串转换为一个 `np.array`,但这只是 Python 中的几行快速代码。"

]

},

{

"cell_type": "code",

"execution_count": 12,

"id": "e09253d5-70ff-4d0e-8888-0022ce0adf7b",

"metadata": {

"tags": []

},

"outputs": [

{

"data": {

"text/plain": [

"array([-0.05630935, -0.03560849, 0.02789049, 0.02792823, -0.02800371,\n",

" -0.01530391, -0.01863454, -0.0077982 , 0.05374297, 0.03672185,\n",

" -0.06114018, -0.06880157, -0.0093503 , -0.03174005, -0.03206085,\n",

" 0.0610647 , 0.02243694, 0.03217408, 0.04181686, 0.00248854])"

]

},

"execution_count": 12,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"response = endpoint.client.post(json={\"inputs\": 'This sound track was beautiful! It paints the senery in your mind so well I would recomend it even to people who hate vid. game music!', 'truncate': True}, task=\"feature-extraction\")\n",

"response = np.array(json.loads(response.decode()))\n",

"response[0][:20]"

]

},

{

"cell_type": "markdown",

"id": "0d024788-6e6e-4a8d-b192-36ee3dacca13",

"metadata": {},

"source": [

"你可能遇到超过上下文长度的输入。在这种情况下,需要你来处理它们。在我的情况下,我更愿意截断而不是出现错误。让我们测试一下这是否有效。\n"

]

},

{

"cell_type": "code",

"execution_count": 13,

"id": "a4a1cd15-dda3-4cfa-8bda-788d8c1b9e32",

"metadata": {

"tags": []

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"The length of the embedding_input is: 300000\n"

]

},

{

"data": {

"text/plain": [

"array([-0.03088215, -0.0351537 , 0.05749275, 0.00983467, 0.02108356,\n",

" 0.04539965, 0.06107162, -0.02536954, 0.03887688, 0.01998681,\n",

" -0.05391388, 0.01529677, -0.1279156 , 0.01653782, -0.01940958,\n",

" 0.0367411 , 0.0031748 , 0.04716022, -0.00713609, -0.00155313])"

]

},

"execution_count": 13,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"embedding_input = 'This input will get multiplied' * 10000\n",

"print(f'The length of the embedding_input is: {len(embedding_input)}')\n",

"response = endpoint.client.post(json={\"inputs\": embedding_input, 'truncate': True}, task=\"feature-extraction\")\n",

"response = np.array(json.loads(response.decode()))\n",

"response[0][:20]"

]

},

{

"cell_type": "markdown",

"id": "f7186126-ef6a-47d0-b158-112810649cd9",

"metadata": {},

"source": [

"# 获取嵌入"

]

},

{

"cell_type": "markdown",

"id": "1dadfd68-6d46-4ce8-a165-bfeb43b1f114",

"metadata": {},

"source": [

"在这里,我发送一个文档,用嵌入更新它,然后返回它。这是与 `MAX_WORKERS` 并行的发生的。"

]

},

{

"cell_type": "code",

"execution_count": 14,

"id": "ad3193fb-3def-42a8-968e-c63f2b864ca8",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"async def request(document, semaphore):\n",

" # Semaphore guard\n",

" async with semaphore:\n",

" result = await endpoint.async_client.post(json={\"inputs\": document['content'], 'truncate': True}, task=\"feature-extraction\")\n",

" result = np.array(json.loads(result.decode()))\n",

" document['embedding'] = result[0] # Assuming the API's output can be directly assigned\n",

" return document\n",

"\n",

"async def main(documents):\n",

" # Semaphore to limit concurrent requests. Adjust the number as needed.\n",

" semaphore = asyncio.BoundedSemaphore(MAX_WORKERS)\n",

"\n",

" # Creating a list of tasks\n",

" tasks = [request(document, semaphore) for document in documents]\n",

" \n",

" # Using tqdm to show progress. It's been integrated into the async loop.\n",

" for f in tqdm(asyncio.as_completed(tasks), total=len(documents)):\n",

" await f"

]

},

{

"cell_type": "code",

"execution_count": 15,

"id": "ec4983af-65eb-4841-808a-3738fb4d682d",

"metadata": {

"tags": []

},

"outputs": [

{

"data": {

"application/vnd.jupyter.widget-view+json": {

"model_id": "48a2affdee8d46f3b0c1f691eaac4b89",

"version_major": 2,

"version_minor": 0

},

"text/plain": [

" 0%| | 0/100 [00:00<?, ?it/s]"

]

},

"metadata": {},

"output_type": "display_data"

},

{

"name": "stdout",

"output_type": "stream",

"text": [

"Embeddings = 100 documents = 100\n",

"0 min 21.33 sec\n"

]

}

],

"source": [

"start = time.perf_counter()\n",

"\n",

"# Get embeddings\n",

"await main(documents)\n",

"\n",

"# Make sure we got it all\n",

"count = 0\n",

"for document in documents:\n",

" if 'embedding' in document.keys() and len(document['embedding']) == 768:\n",

" count += 1\n",

"print(f'Embeddings = {count} documents = {len(documents)}')\n",

"\n",

" \n",

"# Print elapsed time\n",

"elapsed_time = time.perf_counter() - start\n",

"minutes, seconds = divmod(elapsed_time, 60)\n",

"print(f\"{int(minutes)} min {seconds:.2f} sec\")"

]

},

{

"cell_type": "markdown",

"id": "bab97c7b-7bac-4bf5-9752-b528294dadc7",

"metadata": {},

"source": [

"## 暂停推理端点\n",

"\n",

"现在我们已经完成了嵌入,让我们暂停端点,以免产生任何额外费用,同时这也允许我们分析成本。"

]

},

{

"cell_type": "code",

"execution_count": 16,

"id": "540a0978-7670-4ce3-95c1-3823cc113b85",

"metadata": {

"tags": []

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Endpoint Status: paused\n"

]

}

],

"source": [

"endpoint = endpoint.pause()\n",

"\n",

"print(f\"Endpoint Status: {endpoint.status}\")"

]

},

{

"cell_type": "markdown",

"id": "45ad65b7-3da2-4113-9b95-8fb4e21ae793",

"metadata": {},

"source": [

"# 将更新后的数据集推送到 Hub\n",

"现在我们的文档已经更新了我们想要的嵌入。首先我们需要将其转换回 `Dataset` 格式。我发现从字典列表 -> `pd.DataFrame` -> `Dataset` 这条路径最为简单。\n"

]

},

{

"cell_type": "code",

"execution_count": 17,

"id": "9bb993f8-d624-4192-9626-8e9ed9888a1b",

"metadata": {

"tags": []

},

"outputs": [],

"source": [

"df = pd.DataFrame(documents)\n",

"dd = DatasetDict({'train': Dataset.from_pandas(df)})"

]

},

{

"cell_type": "markdown",

"id": "129760c8-cae1-4b1e-8216-f5152df8c536",

"metadata": {},

"source": [

"我默认将其上传到用户的账户(而不是上传到组织),但你可以通过在 `repo_id` 中设置用户或在配置中通过设置 `DATASET_OUT` 来自由推送到任何你想要的地方。\n"

]

},

{

"cell_type": "code",

"execution_count": 18,

"id": "f48e7c55-d5b7-4ed6-8516-272ae38716b1",

"metadata": {

"tags": []

},

"outputs": [

{

"data": {

"application/vnd.jupyter.widget-view+json": {

"model_id": "d3af2e864770481db5adc3968500b5d3",

"version_major": 2,

"version_minor": 0

},

"text/plain": [

"Pushing dataset shards to the dataset hub: 0%| | 0/1 [00:00<?, ?it/s]"

]

},

"metadata": {},

"output_type": "display_data"

},

{

"data": {

"application/vnd.jupyter.widget-view+json": {

"model_id": "4e063c42d8f4490c939bc64e626b507a",

"version_major": 2,

"version_minor": 0

},

"text/plain": [

"Downloading metadata: 0%| | 0.00/823 [00:00<?, ?B/s]"

]

},

"metadata": {},

"output_type": "display_data"

}

],

"source": [

"dd.push_to_hub(repo_id=DATASET_OUT)"

]

},

{

"cell_type": "code",

"execution_count": 19,

"id": "85ea2244-a4c6-4f04-b187-965a2fc356a8",

"metadata": {

"tags": []

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Dataset is at https://huggingface.co/datasets/derek-thomas/processed-subset-bestofredditorupdates\n"

]

}

],

"source": [

"print(f'Dataset is at https://huggingface.co/datasets/{who[\"name\"]}/{DATASET_OUT}')"

]

},

{

"cell_type": "markdown",

"id": "41abea64-379d-49de-8d9a-355c2f4ce1ac",

"metadata": {},

"source": [

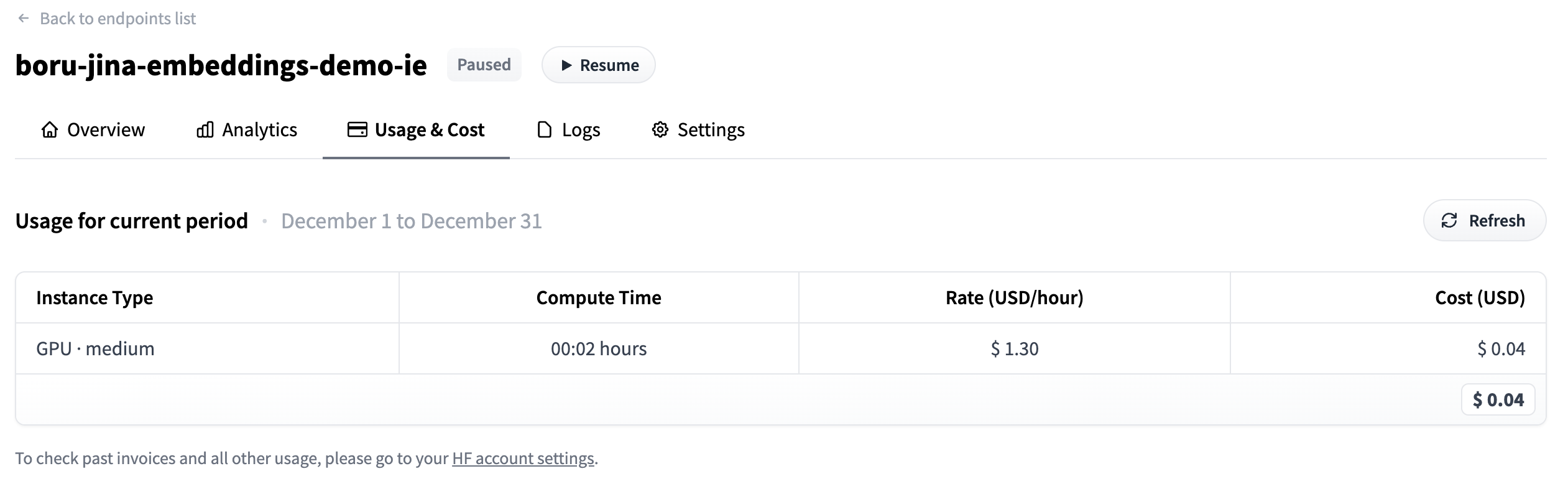

"# 分析使用情况\n",

"1. 前往下面打印的 `dashboard_url`\n",

"2. 点击使用与成本 (Usage & Cost) 标签\n",

"3. 查看你已经花费了多少"

]

},

{

"cell_type": "code",

"execution_count": 20,

"id": "16815445-3079-43da-b14e-b54176a07a62",

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"https://ui.endpoints.huggingface.co/HF-test-lab/endpoints/boru-jina-embeddings-demo-ie\n"

]

}

],

"source": [

"dashboard_url = f'https://ui.endpoints.huggingface.co/{namespace}/endpoints/{ENDPOINT_NAME}'\n",

"print(dashboard_url)"

]

},

{

"cell_type": "code",

"execution_count": 21,

"id": "81096c6f-d12f-4781-84ec-9066cfa465b3",

"metadata": {},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Hit enter to continue with the notebook \n"

]

},

{

"data": {

"text/plain": [

"''"

]

},

"execution_count": 21,

"metadata": {},

"output_type": "execute_result"

}

],

"source": [

"input(\"Hit enter to continue with the notebook\")"

]

},

{

"cell_type": "markdown",

"id": "847d524e-9aa6-4a6f-a275-8a552e289818",

"metadata": {},

"source": [

"我们可以看到只花了 `$0.04` !\n"

]

},

{

"cell_type": "markdown",

"id": "b953d5be-2494-4ff8-be42-9daf00c99c41",

"metadata": {},

"source": [

"\n",

"# 删除端点\n",

"现在我们已经完成了,不再需要我们的端点了。我们可以以编程方式删除端点。\n",

"\n",

""

]

},

{

"cell_type": "code",

"execution_count": 22,

"id": "c310c0f3-6f12-4d5c-838b-3a4c1f2e54ad",

"metadata": {

"tags": []

},

"outputs": [

{

"name": "stdout",

"output_type": "stream",

"text": [

"Endpoint deleted successfully\n"

]

}

],

"source": [

"endpoint = endpoint.delete()\n",

"\n",

"if not endpoint:\n",

" print('Endpoint deleted successfully')\n",

"else:\n",

" print('Delete Endpoint in manually') "

]

}

],

"metadata": {

"kernelspec": {

"display_name": "Python 3 (ipykernel)",

"language": "python",

"name": "python3"

},

"language_info": {

"codemirror_mode": {

"name": "ipython",

"version": 3

},

"file_extension": ".py",

"mimetype": "text/x-python",

"name": "python",

"nbconvert_exporter": "python",

"pygments_lexer": "ipython3",

"version": "3.10.8"

}

},

"nbformat": 4,

"nbformat_minor": 5

}